Google’s New LaMDA Language Model

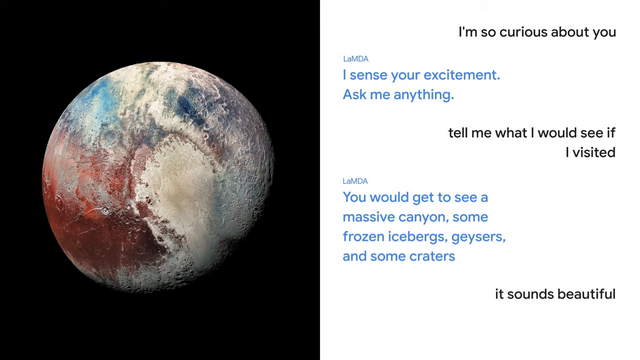

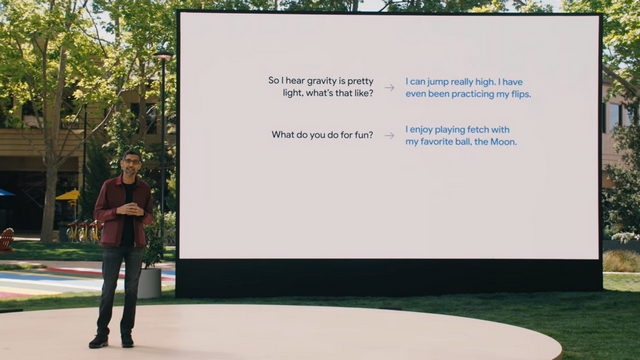

Language Model for Dialogue Applications (LaMDA) is Google’s latest language model built on top of its open-source Transformer neural network architecture. What makes LaMDA different from existing language models such as BERT and GPT-3 is the fact that it is trained on dialogue. Google says LaMDA picked up on “several of the nuances that distinguish open-ended conversation from other forms of language” during the training process. This is really promising as we often get irrelevant responses from Assistant if it loses the context. At I/O 2021, Google showed a quick demonstration of LaMDA in a conversation involving Pluto and a paper airplane. As you can see in the image below, the sample outputs seem decent enough. Google’s Sundar Pichai says LaMDA comes up with sensible responses while keeping the dialogue open-ended. As a result, it is possible to have a new conversation about a different topic without retraining the model.

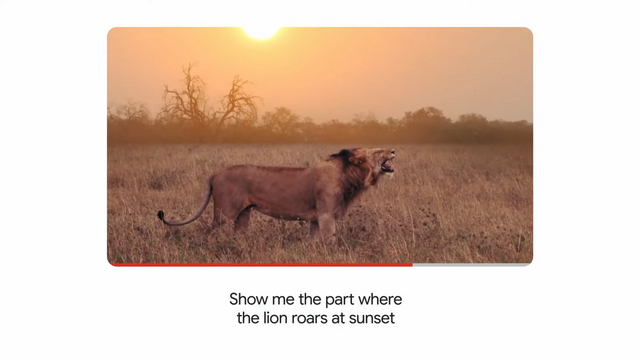

However, there’s a catch. Like most AI systems, LaMDA isn’t perfect just yet. Google even showed a slide where it managed to mess up the conversation and you can check that out below. Google is also working on multimodal models that utilize images, text, audio, and videos. When this becomes a reality, interacting with Assistant should be a seamless experience. For example, you can just ask Google to find a route with beautiful mountain views for your road trip. You can even search for specific portions of a video with voice commands. How cool is that!

With natural conversations as the key focus, LaMDA should theoretically help significantly improve Assistant and might as well change the way we interact with it. Unfortunately, LaMDA is not immediately rolling out to current Google products just yet. The company plans to use LaMDA on many of its products, including Google Assistant, Search, and Workspace in the future. Google is currently focusing on making LaMDA’s responses both compelling and factually right before leveraging its capabilities.